Working Principle of pH Meter

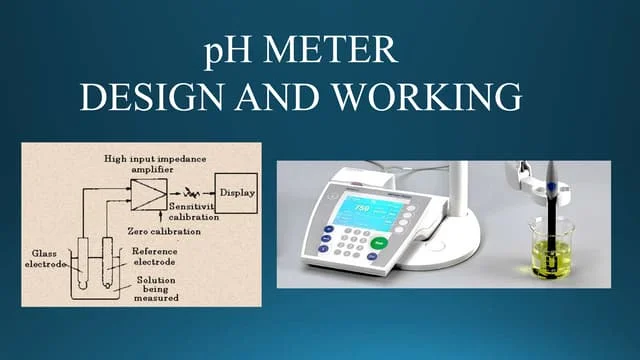

Definition

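A pH meter is an analytical instrument that measures the hydrogen ion activity of a solution (approximately equal to the hydrogen ion concentration, [H⁺], in dilute solutions) and expresses it as pH (potential of hydrogen), defined as pH = −log₁₀ a(H⁺).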
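The Python sketch below gives a quick numerical illustration of the pH scale. It computes pH as the negative base-10 logarithm of the hydrogen ion activity. The function name `ph_from_activity` is hypothetical. Treating activity as equal to molar concentration is an assumption that holds only approximately, in dilute solutions.

```python
import math

def ph_from_activity(a_h: float) -> float:
    """Return pH = -log10(a_H+), where a_H+ is the hydrogen ion activity.

    Assumption: in dilute solutions a_H+ is taken as roughly equal to [H+] in mol/L.
    """
    if a_h <= 0:
        raise ValueError("activity must be positive")
    return -math.log10(a_h)

print(ph_from_activity(1e-7))  # roughly neutral water at 25 °C
print(ph_from_activity(1e-3))  # a dilute acid
```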

Principle

- A pH meter works on the electrochemical principle of measuring the electromotive force (EMF) between two electrodes:
  - Glass electrode (measures H⁺ activity in the test solution)
  - Reference electrode (provides a stable reference potential, e.g., a silver/silver chloride electrode)
- The EMF generated depends on the difference in hydrogen ion activity between the test solution and the solution sealed inside the glass electrode. Because that internal solution and the reference potential are held constant, the EMF varies only with the H⁺ activity of the sample.
- The meter uses the Nernst equation to convert this voltage into pH:

$$\text{pH} = \frac{E_{\text{ref}} - E_{\text{measured}}}{2.303\,RT/F}$$

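Where:

- $E_{\text{ref}}$ = constant potential of the electrode pair (the EMF extrapolated to pH 0, fixed by calibration)
- $E_{\text{measured}}$ = EMF measured in the test solution
- R = gas constant (8.314 J/(mol·K))
- T = absolute temperature (K)
- F = Faraday's constant (96485 C/mol)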

At 25 °C (298.15 K), the slope 2.303RT/F is approximately 59.16 mV per pH unit.
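The Nernst relation above can be sketched in Python as follows. `nernst_slope` and `ph_from_emf` are hypothetical helper names. The `e_ref` value is illustrative and is not a real electrode constant. `math.log(10)` (about 2.3026) stands in for the rounded factor 2.303.

```python
import math

# Constants as given in the text
R = 8.314      # gas constant, J/(mol·K)
F = 96485.0    # Faraday constant, C/mol

def nernst_slope(temp_c: float) -> float:
    """Return the Nernst slope 2.303*R*T/F in volts per pH unit at temp_c (°C)."""
    t_kelvin = temp_c + 273.15
    return math.log(10) * R * t_kelvin / F

def ph_from_emf(e_measured: float, e_ref: float, temp_c: float = 25.0) -> float:
    """pH = (E_ref - E_measured) / (2.303*R*T/F), with both potentials in volts."""
    return (e_ref - e_measured) / nernst_slope(temp_c)

print(f"Slope at 25 °C: {nernst_slope(25.0) * 1000:.2f} mV per pH unit")

# Hypothetical check: an EMF exactly 7 slopes below E_ref corresponds to pH 7.
e_ref = 0.400  # illustrative value only, volts
print(ph_from_emf(e_ref - 7 * nernst_slope(25.0), e_ref))
```

With the constants from the text, the printed slope is about 59.16 mV per pH unit. Using the rounded factor 2.303 instead would give about 59.17 mV.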

Working Steps

- Calibration using standard buffer solutions (e.g., pH 4.00, 7.00, 10.00), which fixes the meter's offset ($E_{\text{ref}}$) and slope.
- Immersion of the electrode system into the sample solution.
- Measurement of the EMF generated by the difference in H⁺ activity.
- Display of the pH value after the meter electronically converts the EMF using the calibrated Nernst relation.
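The calibration and measurement steps can be sketched as a two-point linear fit. This assumes the electrode follows E = E_ref − s·pH, where the practical slope s may fall slightly below the theoretical value. The function names and buffer EMF readings are hypothetical and chosen only for illustration. Real meters may use more buffers or a different fitting procedure.

```python
import math

R = 8.314      # gas constant, J/(mol·K)
F = 96485.0    # Faraday constant, C/mol

def calibrate_two_point(ph1: float, e1: float, ph2: float, e2: float) -> tuple[float, float]:
    """Fit E = E_ref - s*pH through two buffer readings (EMFs in volts).

    Returns (e_ref, s): the intercept at pH 0 and the practical slope in V per pH.
    """
    if ph1 == ph2:
        raise ValueError("the two buffers must have different pH values")
    s = (e1 - e2) / (ph2 - ph1)
    e_ref = e1 + s * ph1
    return e_ref, s

def measure_ph(e_sample: float, e_ref: float, s: float) -> float:
    """Convert a sample EMF (volts) to pH using the calibrated line."""
    return (e_ref - e_sample) / s

def slope_percent(s: float, temp_c: float = 25.0) -> float:
    """Practical slope as a percentage of the theoretical Nernst slope."""
    theoretical = math.log(10) * R * (temp_c + 273.15) / F
    return 100.0 * s / theoretical

# Hypothetical buffer readings (volts), invented for illustration.
e_ref, s = calibrate_two_point(7.00, 0.000, 4.00, 0.175)
print(f"slope = {s * 1000:.1f} mV/pH ({slope_percent(s):.1f} % of theoretical)")
print(f"sample at 0.100 V -> pH {measure_ph(0.100, e_ref, s):.2f}")
```

Many meters report the slope as a percentage of the theoretical Nernst slope to indicate electrode condition. With these invented readings, the value comes out near 98.6 %.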

Key Points for Accurate pH Measurement

- Keep the glass electrode hydrated (store it in KCl solution, not distilled water).
- Avoid contamination of buffers and samples.
- Calibrate regularly, especially before critical measurements.
- Compensate for temperature effects (automatically or manually), because the electrode slope 2.303RT/F changes with temperature.
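Temperature compensation can be sketched by recomputing the Nernst slope at the sample temperature before converting the EMF. This example assumes an ideal electrode whose EMF is 0 V at pH 7.00 (the isopotential point). That is equivalent to setting E_ref = 7 × 2.303RT/F in the Nernst equation. The reading of −0.118 V and the helper names are hypothetical.

```python
import math

R = 8.314      # gas constant, J/(mol·K)
F = 96485.0    # Faraday constant, C/mol
PH_ISO = 7.00  # assumed isopotential pH of an ideal electrode
E_ISO = 0.0    # assumed EMF (V) at the isopotential point

def slope(temp_c: float) -> float:
    """Nernst slope 2.303*R*T/F in V per pH unit at temp_c (°C)."""
    return math.log(10) * R * (temp_c + 273.15) / F

def ph_compensated(e_measured: float, temp_c: float) -> float:
    """Convert an EMF (V) to pH using the slope at the sample temperature."""
    return PH_ISO + (E_ISO - e_measured) / slope(temp_c)

e = -0.118  # hypothetical sample reading, volts
for t in (25.0, 40.0):
    print(f"{t:.0f} °C: pH {ph_compensated(e, t):.2f}")
```

The same EMF read at 25 °C and at 40 °C gives pH values roughly 0.1 unit apart, which is the error an uncompensated meter would make. This corrects only the electrode slope. It does not account for the real change in a sample's pH with temperature.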